Learn to Develop Apps in Docker Containers Instead of Your Local Machine with Node.js and Express

Table of contents

- Objective 🎯

- Getting Started

- Custom Images with Dockerfile, image Layers & Caching

- Docker networking opening ports

- Dockerignore file

- Syncing source code with bind mounts

- Anonymous Volumes hack

- Read-Only Bind Mounts

- Environment variables and Loading environment variables from a file

- Deleting stale volumes

- Docker Compose

- Development vs Production configs

Objective 🎯

Integrate our Express app into a Docker container and set up a workflow so that we can develop applications entirely in Docker containers instead of on our local machine.

Important Note: 🖋️ These are my notes on Learn Docker - DevOps with Node.js & Express - FreeCodeCamp. Use them as a supplement to the video, not as a standalone tutorial.


Getting Started

⏱️ Demo Express App Setup: 0:00:14

We set up a demo Express app so that we can containerize it for further development.

Custom Images with Dockerfile, image Layers & Caching

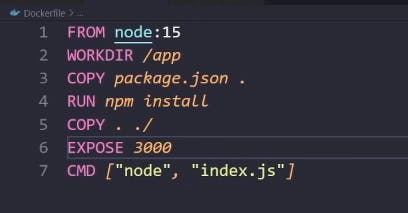

⏱️ Custom Images with Dockerfile: 0:04:18

⏱️ Docker image layers & caching: 0:10:34

FROM node:15

To create our custom image, we start by pulling the official Node image (version 15). This is our base image, and we customize it to build our own image on top of it.

WORKDIR /app

Then we set the working directory in the container to /app. WORKDIR creates the directory if it doesn't exist yet, and the instructions that follow (COPY, RUN, CMD) run relative to it.

COPY package.json .

We copy package.json from our local machine into the image. The trailing . is the destination, i.e. the current working directory (/app).

RUN npm install

We run npm install inside the image to install the dependencies defined in package.json.

COPY . ./

We copy the rest of our files into the image, e.g. index.js.

EXPOSE 3000

This documents that the app inside the container listens on port 3000. EXPOSE does not publish the port to our machine by itself, so in practice it mostly serves as documentation.

CMD ["node", "index.js"]

When a container starts from this image, it runs node index.js to start our Express app.

💭 Why do we copy package.json first and then the rest of the files?

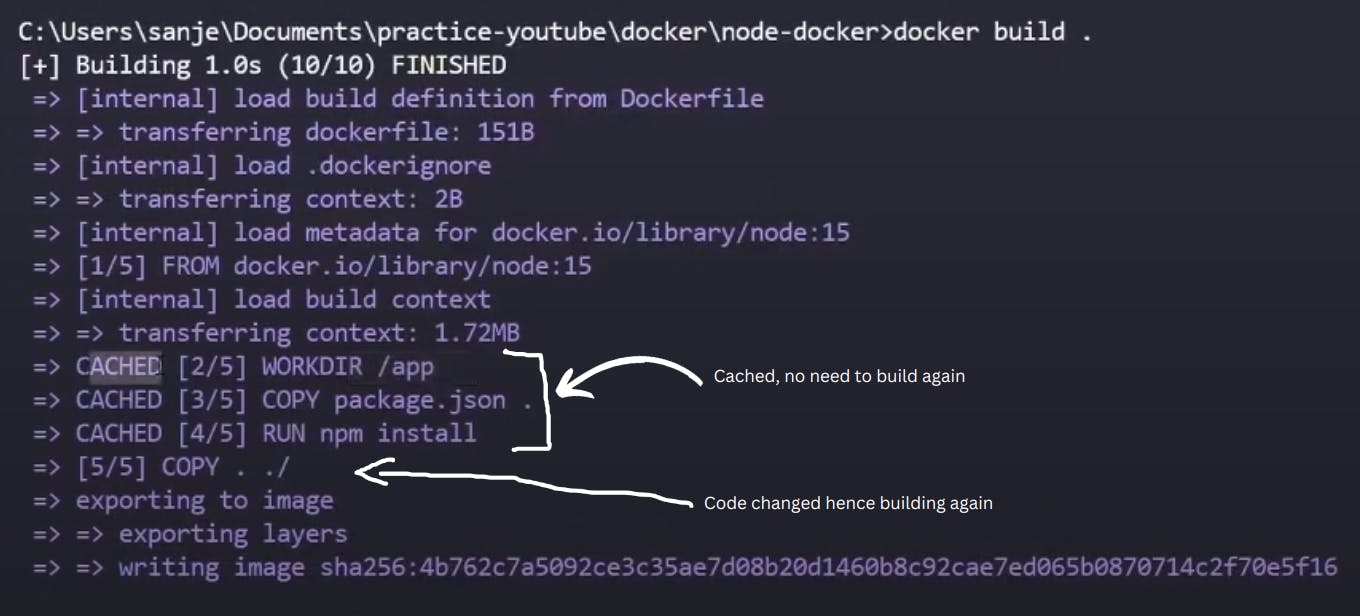

✍️ An image is built step by step from the instructions in the Dockerfile (FROM, COPY, RUN, etc.). Instructions that change the filesystem, such as COPY and RUN, create a new “layer” that's essentially a diff of the filesystem changes since the previous step. package.json lists our dependencies, which rarely change in most projects, while index.js holds our code, which keeps changing as we develop the app.

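By copying package.json first and running npm install right after it, we get a layer containing all the dependencies, and Docker caches it. Docker reuses a cached step as long as that step and every step before it are unchanged, and reruns everything from the first changed step onward. So when only index.js changes, the rebuild starts at COPY . ./ and we don't have to wait for the dependencies to install again. If we copied all the files before running npm install, every code change would invalidate the cache and rerun npm install. In short, it is an optimization technique.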
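To make the ordering argument concrete, here is a small JavaScript model of the build cache. It is a simplified sketch, not Docker's actual implementation: it assumes each step's cache key is derived from the previous step's key, the instruction text, and, for COPY, the contents of the copied files. The step list mirrors our Dockerfile; the file contents are hypothetical.

```javascript
// Simplified model of Docker's build cache (an illustration, not Docker's
// real implementation): a step's cache key depends on the previous step's key,
// the instruction text and, for COPY, the contents of the files it copies.
const crypto = require("crypto");

function sha(text) {
  return crypto.createHash("sha256").update(text).digest("hex");
}

// steps: [{ instruction, files? }], context: { path: fileContent }
function cacheKeys(steps, context) {
  const keys = [];
  let parent = "";
  for (const step of steps) {
    const copied = (step.files || []).map((f) => `${f}:${context[f]}`).join("\n");
    parent = sha(`${parent}\n${step.instruction}\n${copied}`);
    keys.push(parent);
  }
  return keys;
}

// Every step from the first cache miss onward has to run again.
function stepsToRebuild(steps, context, previousKeys) {
  const keys = cacheKeys(steps, context);
  const firstMiss = keys.findIndex((key, i) => key !== previousKeys[i]);
  return firstMiss === -1 ? [] : steps.slice(firstMiss).map((s) => s.instruction);
}

const steps = [
  { instruction: "FROM node:15" },
  { instruction: "WORKDIR /app" },
  { instruction: "COPY package.json .", files: ["package.json"] },
  { instruction: "RUN npm install" },
  { instruction: "COPY . ./", files: ["package.json", "index.js"] },
  { instruction: 'CMD ["node", "index.js"]' },
];

// Hypothetical file contents for two builds where only index.js changed.
const v1 = { "package.json": '{"dependencies":{"express":"^4.17.1"}}', "index.js": "// v1" };
const v2 = { ...v1, "index.js": "// v2" };

const before = cacheKeys(steps, v1);
console.log(stepsToRebuild(steps, v2, before));
// only "COPY . ./" and the CMD step run again; npm install stays cached
```

If package.json changes instead, the first miss is at COPY package.json ., so npm install runs again, which is exactly the case where we want it to.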

💭 Why can't we use RUN ["node", "index.js"] instead of CMD ["node", "index.js"]?

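✍️ RUN executes at build time (while the image is being built), whereas CMD sets the command that runs at runtime (when we start a container). We want the Express app to start when we run the container. A RUN node index.js step would also never finish, because the server keeps running and the build would hang.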

Docker networking opening ports

⏱️ Docker networking opening ports: 0:20:26

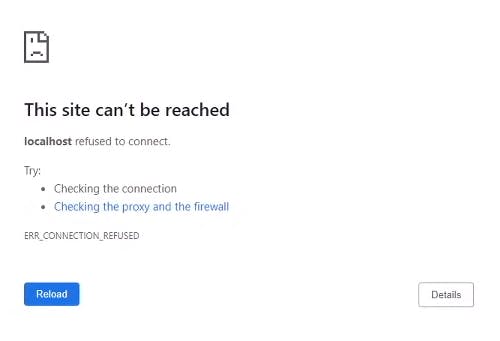

Our Express app listens on port 3000. This means that when we run it on our machine and open localhost:3000 in the browser, we see the response from the app.

But when we run the app in a container and open localhost:3000, we get no response.

💭 Why doesn't our request reach the Express app?

✍️ By default, a container can talk to the outside world (our machine and the internet), but none of its ports are published on our machine, so the outside world can't talk to the container. Our request to localhost:3000 never reaches it. You can think of it as a security mechanism.

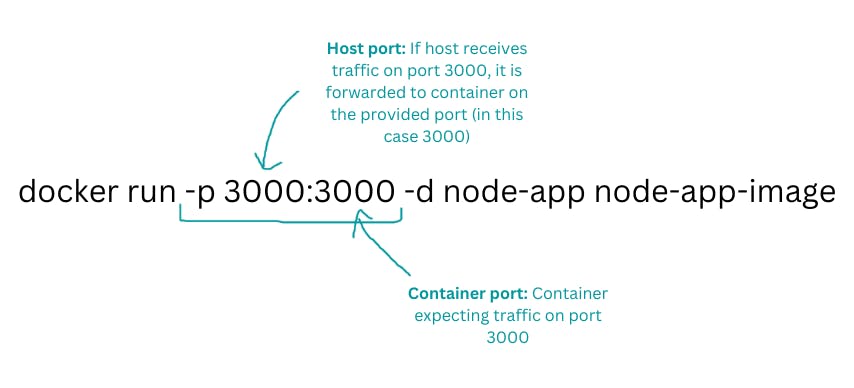

💭 How can we make the outside world talk to the container so that we can send requests to our express app?

✍️ We need to publish one of the container's ports by binding it to a port on our machine. Whenever a request is sent to localhost on that port, it is forwarded to the container. To achieve this, we add the -p flag to our docker run command in the form -p host-port:container-port, e.g. -p 3000:3000.
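The order of the two ports in -p is easy to mix up. The hypothetical helper below (not part of Docker or any library) splits a -p value into its parts to show that the host side comes first. It is a simplified sketch: it only accepts hostPort:containerPort or ip:hostPort:containerPort with an optional /tcp or /udp suffix, and it rejects forms Docker does accept, such as a lone container port or IPv6 addresses.

```javascript
// Hypothetical helper (not part of Docker) that splits a -p value into its
// parts, to show which side is the host and which is the container.
// Supports "hostPort:containerPort" and "ip:hostPort:containerPort", with an
// optional "/tcp" or "/udp" suffix; IPv6 addresses are not handled.
function parsePublish(spec) {
  const [mapping, protocol = "tcp"] = spec.split("/");
  const parts = mapping.split(":");
  if (parts.length < 2 || parts.length > 3) {
    throw new Error(`expected [ip:]hostPort:containerPort, got "${spec}"`);
  }
  const [hostPort, containerPort] = parts.slice(-2).map(Number);
  if (!Number.isInteger(hostPort) || !Number.isInteger(containerPort)) {
    throw new Error(`ports must be numbers, got "${spec}"`);
  }
  // Without an IP, Docker listens on all host interfaces (shown as 0.0.0.0).
  const hostIp = parts.length === 3 ? parts[0] : "0.0.0.0";
  return { hostIp, hostPort, containerPort, protocol };
}

// docker run -p 4000:3000 ...  means localhost:4000 on our machine
// is forwarded to port 3000, where Express listens inside the container.
console.log(parsePublish("4000:3000"));
```

With -p 4000:3000 we would browse to localhost:4000, even though the app inside the container still listens on 3000.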

Dockerignore file

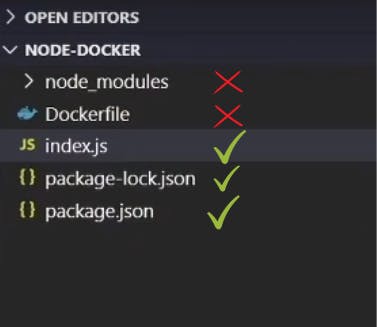

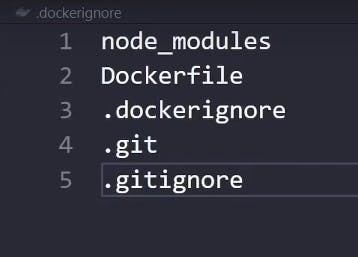

COPY . ./ copies every file in our current directory into the image, which we don't want. We don't need to copy node_modules (npm install already creates it inside the image), the Dockerfile, or our git files. The image only needs package.json (to install dependencies) and index.js.

To solve this, we create a .dockerignore file (which works much like .gitignore) and list the files and folders we don't want to copy.
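For this project, a .dockerignore might list node_modules, Dockerfile, .dockerignore, .git and .gitignore, one per line (listing .dockerignore itself is our assumption). The JavaScript sketch below models the effect on a hypothetical project layout. It is deliberately simplified: each pattern is a plain path that excludes that file or everything inside that directory, whereas real .dockerignore files also support wildcards and ! exceptions.

```javascript
// Simplified model of a .dockerignore (real files also support *, ** and
// "!" exceptions): each pattern is a plain path that excludes that file or
// everything inside that directory from the build context.
const ignorePatterns = ["node_modules", "Dockerfile", ".dockerignore", ".git", ".gitignore"];

function isIgnored(filePath, patterns) {
  return patterns.some((p) => filePath === p || filePath.startsWith(`${p}/`));
}

// Files that remain in the build context, i.e. what COPY . ./ can copy.
function buildContext(files, patterns) {
  return files.filter((f) => !isIgnored(f, patterns));
}

// Hypothetical project layout.
const projectFiles = [
  "index.js",
  "package.json",
  "Dockerfile",
  ".dockerignore",
  ".gitignore",
  ".git/HEAD",
  "node_modules/express/index.js",
];

console.log(buildContext(projectFiles, ignorePatterns)); // [ 'index.js', 'package.json' ]
```

Ignoring the Dockerfile does not break the build: Docker still reads it to build the image, it just isn't copied into the image by COPY . ./.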

Syncing source code with bind mounts

⏱️ Syncing source code with bind mounts: 0:31:46

Whenever you change your code in index.js, you need to stop the container, rebuild the image, and run the container again before the change shows up in the app. That's because the code from your local machine is copied into the image only when the image is built. This is a tedious process and not very friendly for development.

The solution for this is Bind Mounts. When you use a bind mount, a file or directory on the host machine is mounted into a container. The file or directory is referenced by its absolute path on the host machine. In simple words, your current directory will be synced to the container. Any changes you make in index.js will be reflected in the container.

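You can add the -v flag to the docker run command. The host path must be absolute, so for the current directory you can use $(pwd) in a Linux or macOS shell: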

docker run ... -v host-path:container-path ...

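Even though the updated index.js is now available in the container, you still need to restart the node process to see the changes, because Node loads the code once when it starts (this isn't specific to Express). To restart it automatically on every change, install nodemon as a dev dependency and start the app with nodemon during development.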

Anonymous Volumes hack

⏱️ Anonymous Volumes hack: 0:45:30

We don't need the node_modules folder on our local machine because we are not running the app there, so we delete it. But deleting node_modules creates a problem.

Because the bind mount syncs our current directory with /app in the container, the container now sees our local directory, which no longer has node_modules. The node_modules folder that npm install created in the image is hidden by the mount, so our app can't find its dependencies and won't work.

We want the bind mount to leave the container's node_modules folder alone. To do this, we can use the anonymous volumes hack.

We can create an anonymous volume through the -v flag in the docker run command:

docker run ... -v /app/node_modules ...

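Docker applies mounts by specificity: /app/node_modules is more specific than the bind mount on /app, so the anonymous volume takes precedence for that folder. When the new volume is created, Docker fills it with the node_modules contents the image already has, so the container keeps its dependencies even though our local folder has none.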
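The precedence rule can be sketched as "the mount with the longest matching container path wins". The JavaScript below is a simplified model of that rule, not Docker's code; the host path /home/me/project is hypothetical and stands in for $(pwd).

```javascript
// Simplified model: given the mounts of a container, find which one serves a
// path inside the container. The mount with the longest matching target
// wins, which is why /app/node_modules shadows the bind mount on /app.
function mountFor(containerPath, mounts) {
  const matches = mounts.filter(
    (m) => containerPath === m.target || containerPath.startsWith(`${m.target}/`)
  );
  // No mount covers this path: it comes from the image's own filesystem.
  if (matches.length === 0) return { type: "image", target: "/" };
  return matches.reduce((best, m) => (m.target.length > best.target.length ? m : best));
}

// Mounts created by: docker run -v "$(pwd)":/app -v /app/node_modules ...
const mounts = [
  { type: "bind", source: "/home/me/project", target: "/app" },
  { type: "volume", source: "(anonymous)", target: "/app/node_modules" },
];

console.log(mountFor("/app/index.js", mounts).type); // bind
console.log(mountFor("/app/node_modules/express/index.js", mounts).type); // volume
```

Our edits to index.js therefore reach the container through the bind mount, while requires of express resolve into the anonymous volume.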

💭 Do we really need to add the COPY command in the Dockerfile when we are already syncing our current directory through bind mounts?

✍️ Yes, we still need the COPY command. We use the bind mount only to make development easier. In production we won't use a bind mount, so our image must contain the source code to run the application.

Read-Only Bind Mounts

⏱️ Read-Only Bind Mounts: 0:51:58

Through a bind mount, the container can also create, modify, or delete files on your local machine. That can be useful when the container needs to save something, but usually we don't want our containers messing around with our source code: they could modify our code or delete an important file.

You can make the bind mount read-only so that the container can't create, modify, or delete your files. To do that, add :ro after the container path:

docker run ... -v host-path:container-path:ro ...
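To check from inside the container that a :ro mount really is read-only, the app (or a one-off node script) can ask the file system whether a directory is writable. This is a small sketch with a hypothetical helper name; it relies on Node's fs.accessSync, which on a read-only file system fails with the error code EROFS.

```javascript
// Hypothetical helper: reports whether this process may write to a directory.
// On a read-only bind mount (:ro), access() fails with the code EROFS.
const fs = require("fs");

function writeStatus(dir) {
  try {
    fs.accessSync(dir, fs.constants.W_OK);
    return "writable";
  } catch (err) {
    // EROFS: read-only file system; other codes (EACCES, ENOENT, ...) are reported as-is
    return err.code === "EROFS" ? "read-only mount" : `not writable (${err.code})`;
  }
}

console.log(writeStatus("/app"));
```

Run inside a container started with -v host-path:/app:ro, this should report "read-only mount"; without :ro it should report "writable".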

Environment variables and Loading environment variables from a file

⏱️ Environment variables: 0:54:48

⏱️ Loading environment variables from file: 0:59:18

An environment variable is a name/value pair whose value is set outside the program, typically by the operating system, the shell, or, in our case, Docker. Any number of them can be defined, and the program reads them at runtime.

In Node.js, environment variables are loaded into process.env when the app starts, and we access one by appending its name, e.g. process.env.PORT.

To pass an environment variable to the container, use the -e flag:

docker run ... -e PORT=4000 ...
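On the app side, the port can be read from process.env with a fallback, so the same code works with and without -e. A minimal sketch, assuming a hypothetical getPort helper that takes the environment as a parameter so it is easy to test:

```javascript
// Reads the port from the environment (e.g. set with docker run -e PORT=4000),
// falling back to 3000 when PORT is missing or not a valid port number.
function getPort(env = process.env) {
  const port = Number.parseInt(env.PORT, 10);
  return Number.isInteger(port) && port > 0 && port < 65536 ? port : 3000;
}

// In index.js this would be used as: app.listen(getPort(), ...)
console.log(getPort({ PORT: "4000" })); // 4000
console.log(getPort({})); // 3000
```

Environment variables are always strings, hence the parseInt. If PORT changes the port the app listens on, the container side of -p has to change with it, e.g. -p 3000:4000.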

If you have a bunch of environment variables, you can put them in a file and pass the file to the container:

docker run ... --env-file your-env-file ...
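The file contains one variable per line. The JavaScript below is a simplified model of how such a file is read, not Docker's own parser: it assumes VAR=value lines, skips blank lines and lines starting with #, takes values literally (quotes are not stripped), and lets a bare VAR copy its value from the host. The file contents in the example are hypothetical.

```javascript
// Simplified model of the --env-file format (not Docker's own parser):
// one VAR=value per line, blank lines and lines starting with # are skipped,
// values are taken literally (quotes are not stripped), and a bare VAR
// copies its value from the host environment when it is set there.
function parseEnvFile(text, hostEnv = process.env) {
  const vars = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trimStart();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq === -1) {
      const name = line.trim();
      if (hostEnv[name] !== undefined) vars[name] = hostEnv[name];
    } else {
      // Everything after the first "=" is the value.
      vars[line.slice(0, eq)] = line.slice(eq + 1);
    }
  }
  return vars;
}

// Hypothetical contents of a file passed with --env-file
const envFileText = ["# app settings", "PORT=4000", "", "GREETING=hello=world", "HOME"].join("\n");
console.log(parseEnvFile(envFileText, { HOME: "/root" }));
// PORT is "4000" (a string), GREETING is "hello=world", HOME is copied from the host
```

Keeping these values in a file also keeps them out of the (already long) docker run command.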

Deleting stale volumes

⏱️ Deleting stale volumes: 1:01:31

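Anonymous volumes are kept even after we delete the containers that created them, so they pile up if we don't delete them. To delete a container's anonymous volumes together with the container, add the -v flag to docker rm (e.g. docker rm -fv container-name). To clean up volumes that no container uses anymore, we can run docker volume prune.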

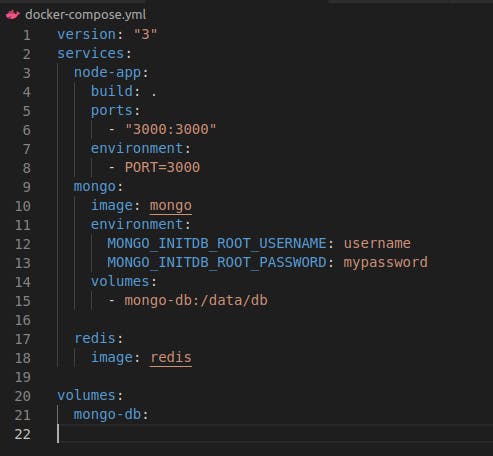

Docker Compose

⏱️ Docker Compose: 1:04:01

Problem:

As we add more and more configuration to our container, the docker run command becomes very long, which is not a practical way of running containers.

If we have more than one container, we have to start each of them separately and set up the communication between them manually through Docker networks. A project can have many containers, so we need to automate this process.

Solution:

Docker Compose comes to the rescue. Instead of long docker run commands, we write the configuration of all our containers (called services) in a YAML file, and then start or stop all of them with a single command (docker-compose up / docker-compose down).

On top of that, we don't need to set up networking between the containers ourselves. Docker Compose automatically creates a default network through which all the containers can talk to each other, using the service names as host names.

Here's what a docker-compose file looks like:

Development vs Production configs

⏱️ Development vs Production configs: 1:21:36

While developing the app, we use configuration such as bind mounts and nodemon that we don't need in production. But when we define our docker-compose file, we do add them to our YAML file. So how do we configure our app for both development and production?

The answer is simple: we create three docker-compose files:

- docker-compose.yml: configuration common to both development and production.

- docker-compose.dev.yml: configuration required only for development, such as dev dependencies, bind mounts, and anonymous volumes.

- docker-compose.prod.yml: configuration required only for production.

To start the containers with the development configuration, we can run:

docker-compose -f docker-compose.yml -f docker-compose.dev.yml up

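This command starts the containers with the development configuration. The order of the -f files matters: Compose merges them from left to right, so docker-compose.dev.yml builds on top of docker-compose.yml and overrides its settings where they conflict, not the other way around. For production, we pass docker-compose.prod.yml in place of docker-compose.dev.yml.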
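Why order matters can be sketched with a simplified merge in JavaScript, where files are applied left to right and later values win. This is an illustration, not Compose's implementation: it assumes nested mappings merge key by key, lists are concatenated, and other values are replaced, whereas Compose's documented rules treat some keys (volumes, for example) specially. The service name node-app and the command npm run dev are hypothetical.

```javascript
// Simplified model of how Compose combines -f files (not Compose's code):
// nested mappings merge key by key, lists are concatenated without
// duplicates, and any other value from the later file replaces the earlier one.
function isPlainObject(v) {
  return v !== null && typeof v === "object" && !Array.isArray(v);
}

// Returns a new object; neither input is mutated.
function mergeCompose(base, override) {
  const result = { ...base };
  for (const [key, value] of Object.entries(override)) {
    const current = result[key];
    if (isPlainObject(current) && isPlainObject(value)) {
      result[key] = mergeCompose(current, value);
    } else if (Array.isArray(current) && Array.isArray(value)) {
      result[key] = [...new Set([...current, ...value])];
    } else {
      result[key] = value;
    }
  }
  return result;
}

// Hypothetical contents of docker-compose.yml and docker-compose.dev.yml
const base = {
  services: {
    "node-app": { build: ".", ports: ["3000:3000"], environment: { PORT: "3000" }, command: "node index.js" },
  },
};
const dev = {
  services: {
    "node-app": {
      volumes: ["./:/app", "/app/node_modules"],
      environment: { NODE_ENV: "development" },
      command: "npm run dev",
    },
  },
};

// Like: -f docker-compose.yml -f docker-compose.dev.yml
const merged = [base, dev].reduce(mergeCompose);
console.log(merged.services["node-app"].command); // npm run dev
// Reversing the order lets the base file win instead.
console.log(mergeCompose(dev, base).services["node-app"].command); // node index.js
```

The dev file only has to describe what differs from the common file, which is why it must come second on the command line.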

Part 2 and Part 3: 1:44:47